[DISCUSSION] refining usage of numberofcores in CarbonProperties

1. many places use the function 'getNumOfCores' of CarbonProperties which

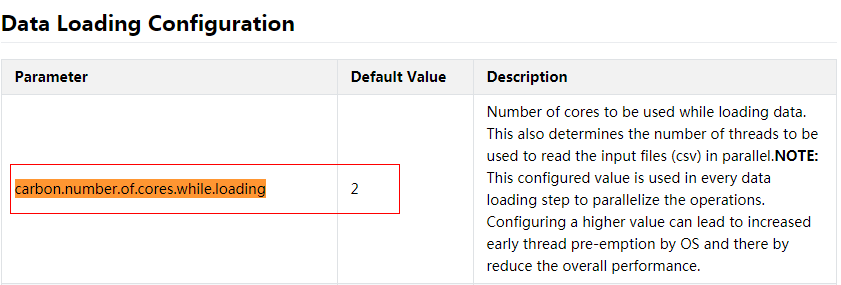

returns the loading cores. 2. so if we still use the value in scene like 'query' or 'compaction' , it will be confused. and i start the pr 2907 <https://github.com/apache/carbondata/pull/2907> when loading data,the loading cores will be changed not the default value if the property 'carbon.number.of.cores.while.loading' unset, and the value will be set in CarbonProperties which is singleton, the code: // get the value of 'spark.executor.cores' from spark conf, default value is 1 val sparkExecutorCores = sparkSession.sparkContext.conf.get("spark.executor.cores", "1") // get the value of 'carbon.number.of.cores.while.loading' from carbon properties, // default value is the value of 'spark.executor.cores' val numCoresLoading = try { CarbonProperties.getInstance() .getProperty(CarbonCommonConstants.NUM_CORES_LOADING, sparkExecutorCores) } catch { case exc: NumberFormatException => LOGGER.error("Configured value for property " + CarbonCommonConstants.NUM_CORES_LOADING + " is wrong. Falling back to the default value " + sparkExecutorCores) sparkExecutorCores } // update the property with new value carbonProperty.addProperty(CarbonCommonConstants.NUM_CORES_LOADING, numCoresLoading) so the description in the document for 'carbon.number.of.cores.while.loading' is wrong: carbon document  and the value 'cores' of compation or partition should be dealed with the same using 'spark.executor.cores'? -- Sent from: http://apache-carbondata-dev-mailing-list-archive.1130556.n5.nabble.com/ |

Re: [DISCUSSION] refining usage of numberofcores in CarbonProperties

I think you are talking about 2 problems.

The first problem: we have several configurations for the number of cores, such as the cores for Loading/Compaction/AlterPartition. Currently they all use the same method to get the configured value, which means they are all actually using numberOfCoresWhileLoading. Your PR#2907 fixes this problem.

The second problem: for data loading, the document says it will use 2 cores by default if numberOfCoresWhileLoading is not configured, but our code actually uses numberOfCoresForCurrentExecutor as the default value. This problem is not resolved in your PR#2907 yet.

To fix the 2nd problem, my suggestion is as below:

1. Update the document for the default value of numOfCoresWhileLoading. The default value will be 'spark.executor.cores'.
2. At the same time, I think you should also optimize the default value of numOfCoresWhileCompaction. To keep the behavior the same, the default value should also be 'spark.executor.cores'. This requires modifying the document as well as the code.
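To make the suggestion above concrete, here is a minimal sketch of resolving the core count per operation, with 'spark.executor.cores' as the shared default. It is not the CarbonData implementation: `Operation`, `CoreResolver` and `parsePositive` are hypothetical names, plain `Map`s stand in for CarbonProperties and the Spark conf, and only the loading property key is taken from this thread (the compaction and alter-partition keys are assumed for illustration).

```scala
import scala.util.Try

// Hypothetical model of the operations that each need their own core count.
sealed trait Operation { def propertyKey: String }
case object Loading extends Operation {
  val propertyKey = "carbon.number.of.cores.while.loading" // key named in this thread
}
case object Compaction extends Operation {
  val propertyKey = "carbon.number.of.cores.while.compacting" // assumed key
}
case object AlterPartition extends Operation {
  val propertyKey = "carbon.number.of.cores.while.altPartition" // assumed key
}

object CoreResolver {
  // Parse a positive integer; anything else (missing, non-numeric, <= 0) yields None.
  private def parsePositive(s: String): Option[Int] =
    Try(s.trim.toInt).toOption.filter(_ > 0)

  // Resolve the core count for one operation:
  // 1) use the operation's own property if it holds a valid positive integer,
  // 2) otherwise fall back to 'spark.executor.cores',
  // 3) otherwise fall back to 1.
  def numCores(op: Operation,
               carbonProps: Map[String, String],
               sparkConf: Map[String, String]): Int = {
    val executorCores =
      sparkConf.get("spark.executor.cores").flatMap(parsePositive).getOrElse(1)
    carbonProps.get(op.propertyKey).flatMap(parsePositive).getOrElse(executorCores)
  }
}
```

With this shape, each operation reads its own key, so a value configured for loading no longer leaks into compaction or alter partition, and nothing is written back into the shared properties. The value is also parsed as an integer before use, so a malformed setting falls back to the default instead of being passed on as a string.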

Re: [DISCUSSION] refining usage of numberofcores in CarbonProperties

In reply to this post by lianganping

In addition to the last mail, you can handle numCoresOfAlterPartition similarly. Please remember to fix these in another PR, not in PR#2907.
