Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

Hello Team,

We performed the following scenario:

- Created a Hive table *uniqdatahive* as follows:

```sql
CREATE TABLE uniqdatahive (CUST_ID int, CUST_NAME String, ACTIVE_EMUI_VERSION string,
  DOB timestamp, DOJ timestamp, BIGINT_COLUMN1 bigint, BIGINT_COLUMN2 bigint,
  DECIMAL_COLUMN1 decimal(30,10), DECIMAL_COLUMN2 decimal(36,10),
  Double_COLUMN1 double, Double_COLUMN2 double, INTEGER_COLUMN1 int)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n'
STORED AS TEXTFILE;
```

- Created a Carbon table *uniqdata* as follows:

```sql
CREATE TABLE uniqdatahive1 (CUST_ID int, CUST_NAME String, ACTIVE_EMUI_VERSION string,
  DOB timestamp, DOJ timestamp, BIGINT_COLUMN1 bigint, BIGINT_COLUMN2 bigint,
  DECIMAL_COLUMN1 decimal(30,10), DECIMAL_COLUMN2 decimal(36,10),
  Double_COLUMN1 double, Double_COLUMN2 double, INTEGER_COLUMN1 int)
STORED BY 'org.apache.carbondata.format'
TBLPROPERTIES ("TABLE_BLOCKSIZE"= "256 MB");
```

- Loaded 2013 records into the Hive table uniqdatahive:

```sql
LOAD DATA inpath 'hdfs://hadoop-master:54311/data/2000_UniqData_tabdelm.csv' OVERWRITE INTO TABLE uniqdatahive;
```

- Inserted around 7000 records into the Carbon table by running the following query 3 times:

```sql
insert into table uniqdata select * from uniqdatahive;
```

- Once about 7000 records were stored in the Carbon table, every further run of the insert query failed with the following exception:

```
0: jdbc:hive2://hadoop-master:10000> insert into table uniqdata select * from uniqdatahive;
Error: org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 42.0 failed 4 times, most recent failure: Lost task 1.3 in stage 42.0 (TID 125, hadoop-slave-1): org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.AlreadyBeingCreatedException): Failed to APPEND_FILE /user/hive/warehouse/carbon.store/hivetest/uniqdata/Metadata/04936ba3-dbb6-45c2-8858-d9ed864034c1.dict for DFSClient_NONMAPREDUCE_-1303665369_59 on 192.168.2.130 because DFSClient_NONMAPREDUCE_-1303665369_59 is already the current lease holder.
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.recoverLeaseInternal(FSNamesystem.java:2882)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInternal(FSNamesystem.java:2683)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInt(FSNamesystem.java:2982)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFile(FSNamesystem.java:2950)
	at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.append(NameNodeRpcServer.java:654)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.append(ClientNamenodeProtocolServerSideTranslatorPB.java:421)
	at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
	at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)
	at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)
```

*Since then, we have been unable to insert more than 7000 records into the Carbon table from the Hive table.*

Thank You

Best Regards,
*Harsh Sharma*
Sr. Software Consultant
Facebook <https://www.facebook.com/harsh.sharma.161446> | Twitter <https://twitter.com/harsh_sharma5> | Linked In <https://www.linkedin.com/in/harsh-sharma-0a08a1b0?trk=hp-identity-name>
[hidden email]
Skype: *khandal60*
*+91-8447307237*
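When triaging reports like this one, it can help to pull the file path, the requesting client and the current lease holder out of the NameNode message and check whether they are the same client (as they are in the trace above). The sketch below assumes the exact wording seen in this thread ("Failed to APPEND_FILE <path> for <client> on <host> because <holder> is already the current lease holder"); other Hadoop versions may word this differently, and the names `parse_append_failure` and `AppendFailure` are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Assumed message wording, copied from the trace in this thread.
_APPEND_FAILURE = re.compile(
    r"Failed to APPEND_FILE (?P<path>\S+) for (?P<client>\S+) on (?P<host>\S+) "
    r"because (?P<holder>\S+) is already the current lease holder"
)


class AppendFailure(NamedTuple):
    path: str
    client: str
    host: str
    holder: str

    @property
    def same_client(self) -> bool:
        # True when the client asking to append already holds the lease,
        # i.e. it still has the file open for writing.
        return self.client == self.holder


def parse_append_failure(message: str) -> Optional[AppendFailure]:
    """Extract path, client, host and lease holder, or None if the text does not match."""
    match = _APPEND_FAILURE.search(message)
    if match is None:
        return None
    return AppendFailure(**match.groupdict())


if __name__ == "__main__":
    msg = (
        "Failed to APPEND_FILE /user/hive/warehouse/carbon.store/hivetest/uniqdata/"
        "Metadata/04936ba3-dbb6-45c2-8858-d9ed864034c1.dict for "
        "DFSClient_NONMAPREDUCE_-1303665369_59 on 192.168.2.130 because "
        "DFSClient_NONMAPREDUCE_-1303665369_59 is already the current lease holder."
    )
    failure = parse_append_failure(msg)
    print(failure.path)
    print(failure.same_client)
```

A match with `same_client` set points at a writer stream on that `.dict` file that the same client never closed, rather than at contention between two different clients.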

Re: Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

A minor update to my email: the Carbon table uniqdata was created with the query below:

```sql
CREATE TABLE uniqdata (CUST_ID int, CUST_NAME String, ACTIVE_EMUI_VERSION string,
  DOB timestamp, DOJ timestamp, BIGINT_COLUMN1 bigint, BIGINT_COLUMN2 bigint,
  DECIMAL_COLUMN1 decimal(30,10), DECIMAL_COLUMN2 decimal(36,10),
  Double_COLUMN1 double, Double_COLUMN2 double, INTEGER_COLUMN1 int)
STORED BY 'org.apache.carbondata.format'
TBLPROPERTIES ("TABLE_BLOCKSIZE"= "256 MB");
```

Thank You

Best Regards,
*Harsh Sharma*

On Wed, Jan 4, 2017 at 10:48 AM, Harsh Sharma <[hidden email]> wrote:
> [...]

I have met the same problem.

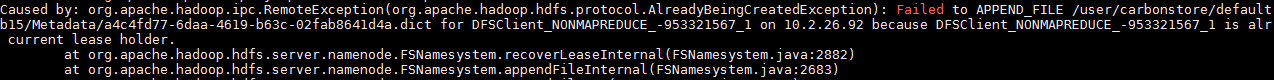

I load data for three times and this exception always throws at the third time. I use the branch-1.0 version from git. Table : cc.sql(s"create table if not exists flightdb15(ID Int, date string, country string, name string, phonetype string, serialname string, salary Int) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.MetadataTypedColumnsetSerDe' STORED BY 'org.apache.carbondata.format' TBLPROPERTIES ('table_blocksize'='256 MB')") Exception:  |
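The NameNode message in these reports says the client asking to append already holds the write lease on the `.dict` file, i.e. it still has that file open. The toy model below is not HDFS code and does not claim to reproduce CarbonData's write path; it only illustrates that single-writer rule, under the assumption that an earlier writer stream on the same file was left open. `ToyNameNode` and `AlreadyBeingCreated` are hypothetical stand-ins.

```python
from typing import Dict


class AlreadyBeingCreated(Exception):
    """Toy stand-in for org.apache.hadoop.hdfs.protocol.AlreadyBeingCreatedException."""


class ToyNameNode:
    """Hypothetical single-writer lease table: at most one open writer per path."""

    def __init__(self) -> None:
        # path -> name of the client that currently holds the write lease
        self._leases: Dict[str, str] = {}

    def append(self, path: str, client: str) -> None:
        """Open `path` for append on behalf of `client`, taking the lease."""
        holder = self._leases.get(path)
        if holder == client:
            # The case in this thread: the requester already holds the lease,
            # so a second open-for-append on the same file is refused.
            raise AlreadyBeingCreated(
                f"Failed to APPEND_FILE {path} for {client} because "
                f"{client} is already the current lease holder."
            )
        if holder is not None:
            # Simplification: real HDFS may attempt lease recovery here instead.
            raise AlreadyBeingCreated(f"Failed to APPEND_FILE {path}: lease held by {holder}")
        self._leases[path] = client

    def close(self, path: str, client: str) -> None:
        """Close the writer; releasing the lease lets a later append succeed."""
        if self._leases.get(path) == client:
            del self._leases[path]


if __name__ == "__main__":
    nn = ToyNameNode()
    dict_file = "/store/db/table/Metadata/example.dict"  # hypothetical path
    nn.append(dict_file, "client-1")
    nn.close(dict_file, "client-1")   # stream closed: lease released
    nn.append(dict_file, "client-1")  # stream left open (assumption)
    try:
        nn.append(dict_file, "client-1")
    except AlreadyBeingCreated as err:
        print(err)
```

In this model the failure depends only on whether the previous stream was closed, not on how many rows were written, which is one way to read the "always on the third load" pattern; the thread itself does not confirm the cause.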

Re: Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

Hi,

Which Hadoop version are you using when compiling the CarbonData jar?

If you are using hadoop-2.2.0, please go through the link below, which says there is an issue with hadoop-2.2.0 when writing a file in append mode:

http://stackoverflow.com/questions/21655634/hadoop2-2-0-append-file-occur-alreadybeingcreatedexception

Regards
Manish Gupta

On Fri, Jan 20, 2017 at 8:10 AM, ffpeng90 <[hidden email]> wrote:
> [...]

I built the jar against Hadoop 2.6, like this:

```shell
mvn package -DskipTests -Pspark-1.5.2 -Phadoop-2.6.0 -DskipTests
```

My Spark version is "spark-1.5.2-bin-hadoop2.6". However, my Hadoop environment is hadoop-2.7.2.

At 2017-01-20 15:05:56, "manish gupta" <[hidden email]> wrote:
> [...]
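The mismatch described here (jar compiled with the hadoop-2.6.0 profile, cluster running 2.7.2) can be spotted before a load is attempted. A minimal sketch, assuming the first line of `hadoop version` output has the form `Hadoop 2.7.2` and that the build used a `hadoop-X.Y.Z` Maven profile as in this thread; the helper names are hypothetical, and a major.minor difference is flagged only as a suspect, not a confirmed cause.

```python
import re
from typing import List, Tuple

Version = Tuple[int, ...]


def _to_tuple(text: str) -> Version:
    return tuple(int(part) for part in text.split("."))


def _show(version: Version) -> str:
    return ".".join(str(part) for part in version)


def parse_hadoop_version(output: str) -> Version:
    """Read the version from `hadoop version` output (assumed line: 'Hadoop X.Y.Z')."""
    match = re.search(r"^Hadoop (\d+(?:\.\d+)*)", output, re.MULTILINE)
    if match is None:
        raise ValueError("no 'Hadoop X.Y.Z' line found")
    return _to_tuple(match.group(1))


def parse_profile_version(profile: str) -> Version:
    """Read the version from a Maven profile name such as 'hadoop-2.6.0'."""
    match = re.fullmatch(r"hadoop-(\d+(?:\.\d+)*)", profile)
    if match is None:
        raise ValueError(f"not a hadoop-X.Y.Z profile: {profile!r}")
    return _to_tuple(match.group(1))


def build_warnings(build_profile: str, cluster_output: str) -> List[str]:
    """List suspected build/runtime problems; an empty list means none were spotted."""
    built = parse_profile_version(build_profile)
    cluster = parse_hadoop_version(cluster_output)
    warnings = []
    if built[:2] != cluster[:2]:
        warnings.append(f"jar built for Hadoop {_show(built)} but cluster runs {_show(cluster)}")
    if cluster == (2, 2, 0):
        # Based on the StackOverflow link cited earlier in this thread.
        warnings.append("Hadoop 2.2.0: append issue reported for this version")
    return warnings
```

For the setup above, `build_warnings("hadoop-2.6.0", "Hadoop 2.7.2")` returns `['jar built for Hadoop 2.6.0 but cluster runs 2.7.2']`; comparing only major.minor is a deliberate simplification.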

Re: Re: Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

Administrator

Hi

```shell
mvn -DskipTests -Pspark-1.5 -Dspark.version=1.5.2 clean package
```

Please refer to the build doc: https://github.com/apache/incubator-carbondata/tree/master/build

Regards
Liang

2017-01-20 16:00 GMT+08:00 彭 <[hidden email]>:
> [...]

Re: Re: Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

In reply to this post by ffpeng90

Can you try compiling with the hadoop-2.7.2 version, use that jar, and let us know if the issue still persists?

```shell
mvn package -DskipTests -Pspark-1.5.2 -Phadoop-2.7.2 -DskipTests
```

Regards
Manish Gupta

On Fri, Jan 20, 2017 at 1:30 PM, 彭 <[hidden email]> wrote:
> [...]

Re: Re: Failed to APPEND_FILE, hadoop.hdfs.protocol.AlreadyBeingCreatedException

Hi,

Please use:

```shell
mvn clean -DskipTests -Pspark-1.5 -Dspark.version=1.5.2 -Phadoop-2.7.2 package
```

Regards,
Ravindra

On 20 January 2017 at 15:42, manish gupta <[hidden email]> wrote:
> [...]
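The command above pins the Spark profile and version and picks the Hadoop profile that matches the cluster. To rebuild for a different cluster version without hand-editing the flags, a hypothetical helper can assemble the same argument list for `subprocess.run`. It assumes profiles named `spark-<major>.<minor>` and `hadoop-<version>` exist for the versions you pass, which should be checked against the build doc linked earlier in the thread.

```python
from typing import List


def carbondata_build_args(spark_version: str, hadoop_version: str) -> List[str]:
    """Assemble mvn arguments in the style of the command above (hypothetical helper).

    Assumes the 'spark-<major>.<minor>' and 'hadoop-<version>' Maven profiles
    exist for the requested versions; verify against the build docs.
    """
    spark_major_minor = ".".join(spark_version.split(".")[:2])
    return [
        "mvn", "clean", "-DskipTests",
        f"-Pspark-{spark_major_minor}",
        f"-Dspark.version={spark_version}",
        f"-Phadoop-{hadoop_version}",
        "package",
    ]


if __name__ == "__main__":
    args = carbondata_build_args("1.5.2", "2.7.2")
    print(" ".join(args))
    # To actually build: import subprocess; subprocess.run(args, check=True)
```

Passing the list (rather than a single string) to `subprocess.run` avoids shell quoting issues; with `("1.5.2", "2.7.2")` it reproduces the command suggested above.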

Return to Apache CarbonData Dev Mailing List archive
