[GitHub] carbondata pull request #2412: [CARBONDATA-2656] Presto vector stream reader...

1234

1234

[GitHub] carbondata pull request #2412: [CARBONDATA-2656] Presto vector stream reader...

|

Github user sv71294 commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2412#discussion_r199070321 --- Diff: integration/presto/src/main/java/org/apache/carbondata/presto/readers/BooleanStreamReader.java --- @@ -17,91 +17,64 @@ package org.apache.carbondata.presto.readers; -import java.io.IOException; - import org.apache.carbondata.core.cache.dictionary.Dictionary; +import org.apache.carbondata.core.metadata.datatype.DataType; import org.apache.carbondata.core.metadata.datatype.DataTypes; +import org.apache.carbondata.core.scan.result.vector.impl.CarbonColumnVectorImpl; import org.apache.carbondata.core.util.DataTypeUtil; import com.facebook.presto.spi.block.Block; import com.facebook.presto.spi.block.BlockBuilder; import com.facebook.presto.spi.block.BlockBuilderStatus; +import com.facebook.presto.spi.type.BooleanType; import com.facebook.presto.spi.type.Type; -public class BooleanStreamReader extends AbstractStreamReader { +public class BooleanStreamReader extends CarbonColumnVectorImpl + implements PrestoVectorBlockBuilder { - private boolean isDictionary; - private Dictionary dictionary; + protected int batchSize; - public BooleanStreamReader() { + protected Type type = BooleanType.BOOLEAN; - } + protected BlockBuilder builder; - public BooleanStreamReader(boolean isDictionary, Dictionary dictionary) { - this.isDictionary = isDictionary; + private Dictionary dictionary; + + public BooleanStreamReader(int batchSize, DataType dataType, Dictionary dictionary) { + super(batchSize, dataType); + this.batchSize = batchSize; + this.builder = type.createBlockBuilder(new BlockBuilderStatus(), batchSize); this.dictionary = dictionary; } - public Block readBlock(Type type) throws IOException { - int numberOfRows = 0; - BlockBuilder builder = null; - if (isVectorReader) { - numberOfRows = batchSize; - builder = type.createBlockBuilder(new BlockBuilderStatus(), numberOfRows); - if (columnVector != null) { - if (isDictionary) { - populateDictionaryVector(type, numberOfRows, builder); - } else { - if (columnVector.anyNullsSet()) { - handleNullInVector(type, numberOfRows, builder); - } else { - populateVector(type, numberOfRows, builder); - } - } - } - } else { - numberOfRows = streamData.length; - builder = type.createBlockBuilder(new BlockBuilderStatus(), numberOfRows); - for (int i = 0; i < numberOfRows; i++) { - type.writeBoolean(builder, byteToBoolean(streamData[i])); - } - } - + @Override public Block buildBlock() { return builder.build(); } - private void handleNullInVector(Type type, int numberOfRows, BlockBuilder builder) { - for (int i = 0; i < numberOfRows; i++) { - if (columnVector.isNullAt(i)) { - builder.appendNull(); - } else { - type.writeBoolean(builder, byteToBoolean(columnVector.getData(i))); - } + @Override public void setBatchSize(int batchSize) { + this.batchSize = batchSize; + } + + @Override public void putInt(int rowId, int value) { + Object data = DataTypeUtil + .getDataBasedOnDataType(dictionary.getDictionaryValueForKey(value), DataTypes.BOOLEAN); + if (data != null) { + type.writeBoolean(builder, (boolean) data); + } else { + builder.appendNull(); } } - private void populateVector(Type type, int numberOfRows, BlockBuilder builder) { --- End diff -- handled by putBoolean --- |

[GitHub] carbondata pull request #2412: [CARBONDATA-2656] Presto vector stream reader...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2412#discussion_r199070375 --- Diff: integration/presto/src/main/java/org/apache/carbondata/presto/readers/BooleanStreamReader.java --- @@ -17,91 +17,64 @@ package org.apache.carbondata.presto.readers; -import java.io.IOException; - import org.apache.carbondata.core.cache.dictionary.Dictionary; +import org.apache.carbondata.core.metadata.datatype.DataType; import org.apache.carbondata.core.metadata.datatype.DataTypes; +import org.apache.carbondata.core.scan.result.vector.impl.CarbonColumnVectorImpl; import org.apache.carbondata.core.util.DataTypeUtil; import com.facebook.presto.spi.block.Block; import com.facebook.presto.spi.block.BlockBuilder; import com.facebook.presto.spi.block.BlockBuilderStatus; +import com.facebook.presto.spi.type.BooleanType; import com.facebook.presto.spi.type.Type; -public class BooleanStreamReader extends AbstractStreamReader { +public class BooleanStreamReader extends CarbonColumnVectorImpl + implements PrestoVectorBlockBuilder { - private boolean isDictionary; - private Dictionary dictionary; + protected int batchSize; - public BooleanStreamReader() { + protected Type type = BooleanType.BOOLEAN; - } + protected BlockBuilder builder; - public BooleanStreamReader(boolean isDictionary, Dictionary dictionary) { - this.isDictionary = isDictionary; + private Dictionary dictionary; + + public BooleanStreamReader(int batchSize, DataType dataType, Dictionary dictionary) { + super(batchSize, dataType); + this.batchSize = batchSize; + this.builder = type.createBlockBuilder(new BlockBuilderStatus(), batchSize); this.dictionary = dictionary; } - public Block readBlock(Type type) throws IOException { - int numberOfRows = 0; - BlockBuilder builder = null; - if (isVectorReader) { - numberOfRows = batchSize; - builder = type.createBlockBuilder(new BlockBuilderStatus(), numberOfRows); - if (columnVector != null) { - if (isDictionary) { - populateDictionaryVector(type, numberOfRows, builder); - } else { - if (columnVector.anyNullsSet()) { - handleNullInVector(type, numberOfRows, builder); - } else { - populateVector(type, numberOfRows, builder); - } - } - } - } else { - numberOfRows = streamData.length; - builder = type.createBlockBuilder(new BlockBuilderStatus(), numberOfRows); - for (int i = 0; i < numberOfRows; i++) { - type.writeBoolean(builder, byteToBoolean(streamData[i])); - } - } - + @Override public Block buildBlock() { return builder.build(); } - private void handleNullInVector(Type type, int numberOfRows, BlockBuilder builder) { - for (int i = 0; i < numberOfRows; i++) { - if (columnVector.isNullAt(i)) { - builder.appendNull(); - } else { - type.writeBoolean(builder, byteToBoolean(columnVector.getData(i))); - } + @Override public void setBatchSize(int batchSize) { + this.batchSize = batchSize; + } + + @Override public void putInt(int rowId, int value) { + Object data = DataTypeUtil + .getDataBasedOnDataType(dictionary.getDictionaryValueForKey(value), DataTypes.BOOLEAN); + if (data != null) { + type.writeBoolean(builder, (boolean) data); + } else { + builder.appendNull(); } } - private void populateVector(Type type, int numberOfRows, BlockBuilder builder) { - for (int i = 0; i < numberOfRows; i++) { - type.writeBoolean(builder, byteToBoolean(columnVector.getData(i))); - } + @Override public void putBoolean(int rowId, boolean value) { + type.writeBoolean(builder, value); } - private void populateDictionaryVector(Type type, int numberOfRows, BlockBuilder builder) { --- End diff -- handled in putInt --- |

[GitHub] carbondata pull request #2412: [CARBONDATA-2656] Presto vector stream reader...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2412#discussion_r199070456 --- Diff: integration/presto/src/main/java/org/apache/carbondata/presto/readers/BooleanStreamReader.java --- @@ -17,91 +17,64 @@ package org.apache.carbondata.presto.readers; -import java.io.IOException; - import org.apache.carbondata.core.cache.dictionary.Dictionary; +import org.apache.carbondata.core.metadata.datatype.DataType; import org.apache.carbondata.core.metadata.datatype.DataTypes; +import org.apache.carbondata.core.scan.result.vector.impl.CarbonColumnVectorImpl; import org.apache.carbondata.core.util.DataTypeUtil; import com.facebook.presto.spi.block.Block; import com.facebook.presto.spi.block.BlockBuilder; import com.facebook.presto.spi.block.BlockBuilderStatus; +import com.facebook.presto.spi.type.BooleanType; import com.facebook.presto.spi.type.Type; -public class BooleanStreamReader extends AbstractStreamReader { +public class BooleanStreamReader extends CarbonColumnVectorImpl + implements PrestoVectorBlockBuilder { - private boolean isDictionary; - private Dictionary dictionary; + protected int batchSize; - public BooleanStreamReader() { + protected Type type = BooleanType.BOOLEAN; - } + protected BlockBuilder builder; - public BooleanStreamReader(boolean isDictionary, Dictionary dictionary) { - this.isDictionary = isDictionary; + private Dictionary dictionary; + + public BooleanStreamReader(int batchSize, DataType dataType, Dictionary dictionary) { + super(batchSize, dataType); + this.batchSize = batchSize; + this.builder = type.createBlockBuilder(new BlockBuilderStatus(), batchSize); this.dictionary = dictionary; } - public Block readBlock(Type type) throws IOException { --- End diff -- builder is created with constructor and insertion function is done within the vector-filling process of CarbonColumnVectorImpl --- |

[GitHub] carbondata pull request #2412: [CARBONDATA-2656] Presto vector stream reader...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2412#discussion_r199083404 --- Diff: integration/presto/src/main/java/org/apache/carbondata/presto/readers/DecimalSliceStreamReader.java --- @@ -45,80 +45,66 @@ /** * Reader for DecimalValues */ -public class DecimalSliceStreamReader extends AbstractStreamReader { +public class DecimalSliceStreamReader extends CarbonColumnVectorImpl + implements PrestoVectorBlockBuilder { + private final char[] buffer = new char[100]; + protected int batchSize; + protected Type type; + protected BlockBuilder builder; private Dictionary dictionary; - private boolean isDictionary; + public DecimalSliceStreamReader(int batchSize, + org.apache.carbondata.core.metadata.datatype.DecimalType dataType, Dictionary dictionary) { + super(batchSize, dataType); + this.type = DecimalType.createDecimalType(dataType.getPrecision(), dataType.getScale()); --- End diff -- here type is decided with the precision value by the presto whether shortDecimal or longDecimal --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

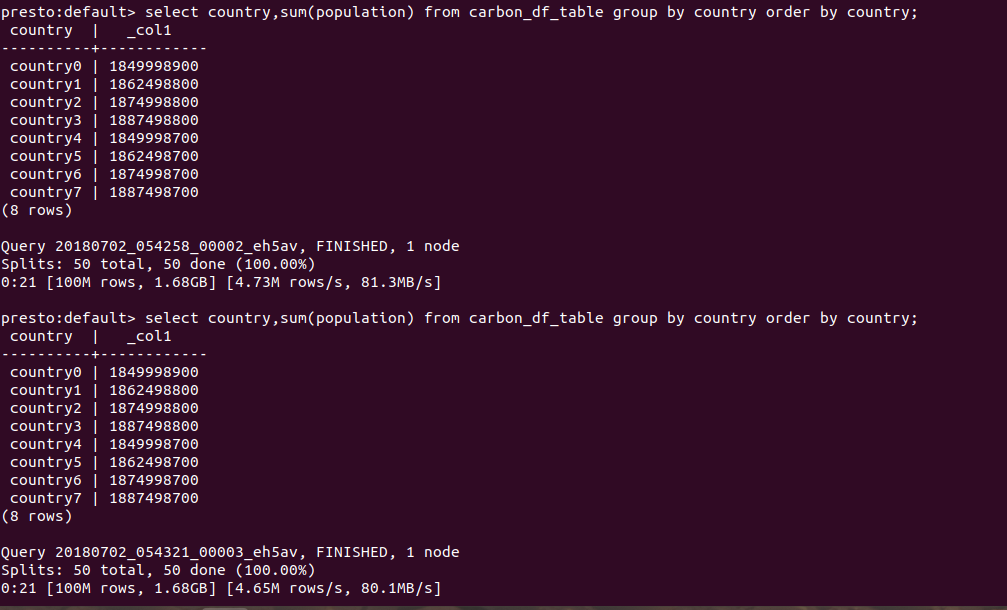

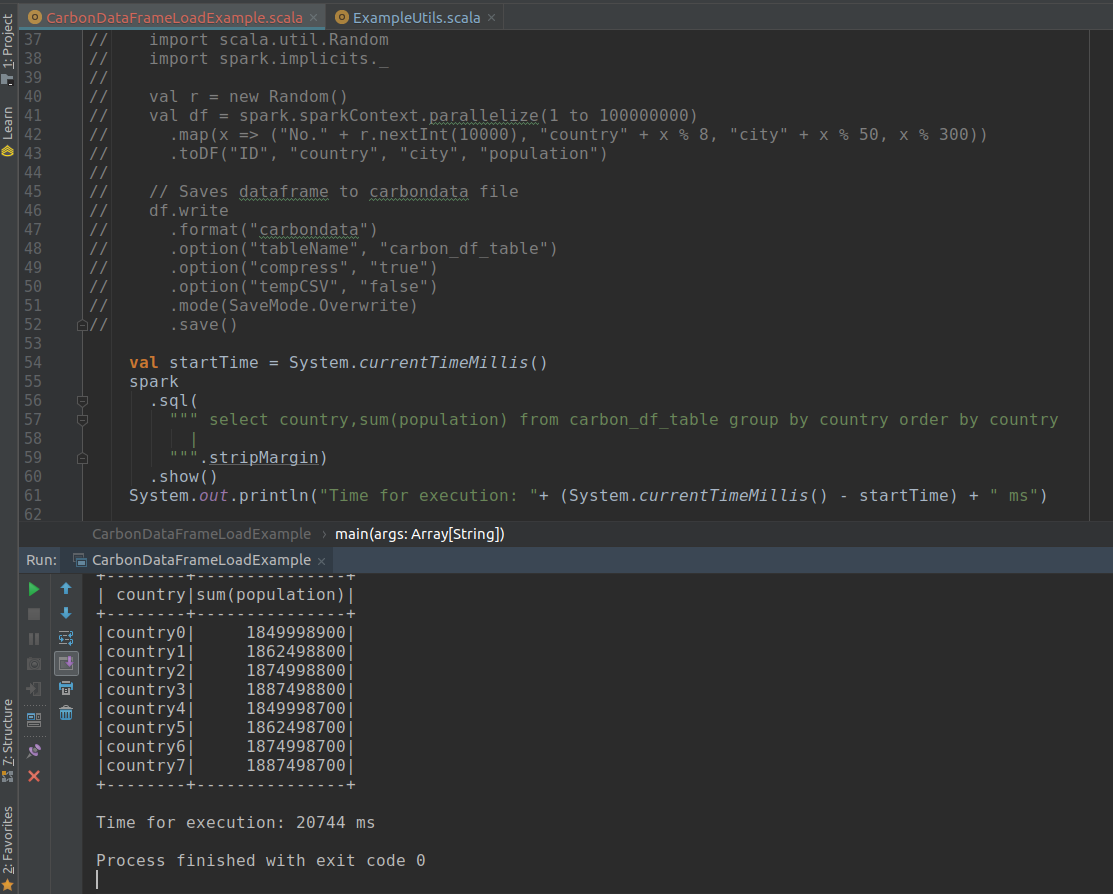

Github user chenliang613 commented on the issue:

https://github.com/apache/carbondata/pull/2412 Used the below script to build data: ``` import scala.util.Random val r = new Random() val df = spark.sparkContext.parallelize(1 to 1000000000).map(x => ("No." + r.nextInt(10000), "country" + x % 8, "city" + x % 50, x % 300)).toDF("ID", "country", "city", "population") ``` Two issues: 1. On presto client, i ran two times as per the below script but get the different results: ``` presto:default> select country,sum(population) from carbon_table group by country; country | _col1 ----------+------------- country4 | 18508531250 country2 | 18758431703 country0 | 18508717865 country7 | 18884021774 country1 | 18633160595 country5 | 18633480022 country6 | 18757895175 country3 | 18883151243 (8 rows) Query 20180630_041406_00004_crn9q, FINISHED, 1 node Splits: 65 total, 65 done (100.00%) 1:01 [1000M rows, 8.4GB] [16.5M rows/s, 142MB/s] presto:default> select country,sum(population) from carbon_table group by country; country | _col1 ----------+------------- country4 | 18500014852 country0 | 18499993972 country5 | 18624989449 country1 | 18625008398 country3 | 18874966666 country6 | 18749995166 country7 | 18874992446 country2 | 18749999687 (8 rows) Query 20180630_041510_00005_crn9q, FINISHED, 1 node Splits: 65 total, 65 done (100.00%) 0:59 [1000M rows, 8.4GB] [17M rows/s, 146MB/s] ``` 2. For aggregation scenarios with 1 billion row data, presto performance is much lower than spark, as below: (presto is around 1 mins, spark is around 33 seconds) ``` scala> benchmark { carbon.sql("select country,sum(population) from carbon_table group by country").show} +--------+---------------+ | country|sum(population)| +--------+---------------+ |country4| 18499998700| |country1| 18624998800| |country3| 18874998800| |country7| 18874998700| |country2| 18749998800| |country6| 18749998700| |country5| 18624998700| |country0| 18499998900| +--------+---------------+ 33849.999703ms ``` --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on the issue:

https://github.com/apache/carbondata/pull/2412 Hi Liang, I tried to reproduce the same issue, with provided script, I created the table and executed the SQL. I am getting the same result with SPARK as well as with PRESTO (within almost same time), please check the attached screenshot.   --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user chenliang613 commented on the issue:

https://github.com/apache/carbondata/pull/2412 @sv71294 After setting the below two parameters, my side's test result be same. but the performance need to check. enable.unsafe.columnpage=false enable.unsafe.sort=false --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Failed with Spark 2.2.1, Please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/5787/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on the issue:

https://github.com/apache/carbondata/pull/2412 retest this please --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Success with Spark 2.2.1, Please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/5788/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Failed with Spark 2.1.0, Please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7011/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2412 SDV Build Fail , Please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5760/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user sv71294 commented on the issue:

https://github.com/apache/carbondata/pull/2412 retest this please --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2412 SDV Build Fail , Please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5761/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2412 SDV Build Success , Please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5763/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Success with Spark 2.1.0, Please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7017/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Success with Spark 2.2.1, Please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/5797/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Success with Spark 2.1.0, Please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7106/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2412 Build Success with Spark 2.2.1, Please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/5882/ --- |

[GitHub] carbondata issue #2412: [CARBONDATA-2656] Presto vector stream readers perfo...

|

In reply to this post by qiuchenjian-2

Github user chenliang613 commented on the issue:

https://github.com/apache/carbondata/pull/2412 LGTM, verified, this pr can improve the performance by 20%. Thanks for the contribution. --- |

«

Return to Apache CarbonData JIRA issues

|

1 view|%1 views

| Free forum by Nabble | Edit this page |