CarbonData table partitioned by date generates many small files


Hi CarbonData team,

I am using CarbonData 1.3.1 to create a table and import data. The load generates many small files and the Spark job is very slow. I suspect the number of files is related to the number of Spark jobs, but if I decrease the number of jobs, the job fails with an OutOfMemory error. My DDL is below:

    create table xx.xx(
      dept_name string,
      xx
      .
      .
      .
    ) PARTITIONED BY (xxx date)
    STORED BY 'carbondata' TBLPROPERTIES('SORT_COLUMNS'='xxx,xxx,xxx ,xxx,xxx')

Please give some advice.

Thanks,
ChenXingYu


Hi,

There is a test case in StandardPartitionTableQueryTestCase that uses a date column as the partition column. If you run that test case, the generated partition folders look like the following picture.

Are you getting similar folders?

Regards,
Jacky


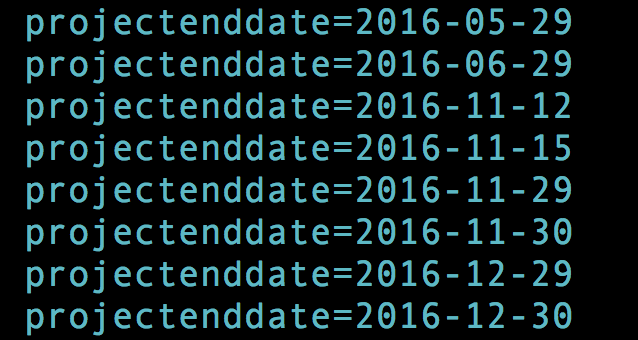

Hi Li,

Yes, I got the partition folders as you described, but under each partition folder there are many small files, as in the following picture. How can they be merged automatically after the jobs are done?

Thanks,
ChenXingYu

In reply to Jacky Li <[hidden email]>, Tue, Jun 5, 2018 08:43 PM.


Hi,

I couldn't see the picture you sent. Can you send it as text?

Regards,
Jacky

In reply to 陈星宇 <[hidden email]>, June 6, 2018, 9:46 AM (thread started June 5, 2018, 2:49 PM).


Hi Jacky,

See my file list below. The load generated lots of small files. How can I merge them?

    8.6 K    25.9 K  /xx/partition_date=2018-05-10/101100620100001_batchno0-0-1529497245506.carbonindex
    4.6 K    13.9 K  /xx/partition_date=2018-05-10/101100621100003_batchno0-0-1529497245506.carbonindex
    4.6 K    13.9 K  /xx/partition_date=2018-05-10/101100626100003_batchno0-0-1529497245506.carbonindex
    4.6 K    13.8 K  /xx/partition_date=2018-05-10/101100636100007_batchno0-0-1529497245506.carbonindex
    4.6 K    13.7 K  /xx/partition_date=2018-05-10/101100637100011_batchno0-0-1529497245506.carbonindex
    4.6 K    13.7 K  /xx/partition_date=2018-05-10/101100641100005_batchno0-0-1529497245506.carbonindex
    4.7 K    14.1 K  /xx/partition_date=2018-05-10/101100648100009_batchno0-0-1529497245506.carbonindex
    6.0 K    18.1 K  /xx/partition_date=2018-05-10/101100649100002_batchno0-0-1529497245506.carbonindex
    885.6 K  2.6 M   /xx/partition_date=2018-05-10/part-0-100100035100009_batchno0-0-1529495933936.carbondata
    6.4 M    19.3 M  /xx/partition_date=2018-05-10/part-0-100100052100013_batchno0-0-1529495933936.carbondata
    11.6 M   34.9 M  /xx/partition_date=2018-05-10/part-0-100100077100011_batchno0-0-1529495933936.carbondata
    427.4 K  1.3 M   /xx/partition_date=2018-05-10/part-0-100100079100003_batchno0-0-1529495933936.carbondata
    5.2 M    15.5 M  /xx/partition_date=2018-05-10/part-0-100100089100010_batchno0-0-1529495933936.carbondata
    16.4 M   49.3 M  /xx/partition_date=2018-05-10/part-0-100100123100008_batchno0-0-1529495933936.carbondata
    6.0 M    18.1 M  /xx/partition_date=2018-05-10/part-0-100100134100006_batchno0-0-1529495933936.carbondata
    9.6 M    28.9 M  /xx/partition_date=2018-05-10/part-0-100100144100006_batchno0-0-1529495933936.carbondata
    28.7 M   86.2 M  /xx/partition_date=2018-05-10/part-0-100100145100001_batchno0-0-1529495933936.carbondata
    11.7 K   35.0 K  /xx/partition_date=2018-05-10/part-0-100100168100040_batchno0-0-1529495933936.carbondata

chenxingyu

In reply to Jacky Li <[hidden email]>, Fri, Jun 8, 2018 04:07 PM.
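To put a number on "lots of small files", a listing like the one above can be summarized with a short script. This is only a minimal sketch: it assumes the first size column is the file size and the second is the space consumed including replication (the layout resembles `hdfs dfs -du -h` output, but the thread does not say which command produced it). The 1 MB threshold and the helper names `parse` and `small_data_files` are hypothetical, chosen for illustration.

```python
import re

# A few lines copied from the listing above: size, size with replication (assumed), path.
LISTING = """\
8.6 K 25.9 K /xx/partition_date=2018-05-10/101100620100001_batchno0-0-1529497245506.carbonindex
885.6 K 2.6 M /xx/partition_date=2018-05-10/part-0-100100035100009_batchno0-0-1529495933936.carbondata
28.7 M 86.2 M /xx/partition_date=2018-05-10/part-0-100100145100001_batchno0-0-1529495933936.carbondata
11.7 K 35.0 K /xx/partition_date=2018-05-10/part-0-100100168100040_batchno0-0-1529495933936.carbondata
"""

UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3}
# Matches "<size> <unit> <size> <unit> <path>"; only the first size is captured.
LINE = re.compile(r"^\s*([\d.]+)\s+([BKMG])\s+[\d.]+\s+[BKMG]\s+(\S+)$")


def parse(listing):
    """Return (path, size_in_bytes) for every line that matches the listing layout."""
    rows = []
    for line in listing.splitlines():
        m = LINE.match(line)
        if m:
            value, unit, path = m.groups()
            rows.append((path, int(float(value) * UNITS[unit])))
    return rows


def small_data_files(rows, threshold):
    """Return the .carbondata files smaller than `threshold` bytes."""
    return [(p, s) for p, s in rows if p.endswith(".carbondata") and s < threshold]


rows = parse(LISTING)
data_count = sum(p.endswith(".carbondata") for p, _ in rows)
small = small_data_files(rows, 1024 * 1024)  # hypothetical 1 MB threshold
print(f"{len(small)} of {data_count} data files are under 1 MB")
```

Running the same script over the full listing of a partition folder shows how many data files fall below whatever size is considered too small for the cluster.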
