Re: carbondata partitioned by date generate many small files

Posted by Jacky Li on Jun 05, 2018; 12:43pm

URL: http://apache-carbondata-dev-mailing-list-archive.168.s1.nabble.com/carbondata-partitioned-by-date-generate-many-small-files-tp51475p51511.html

Hi,

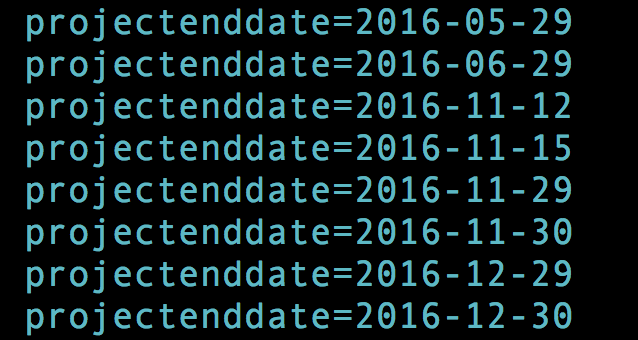

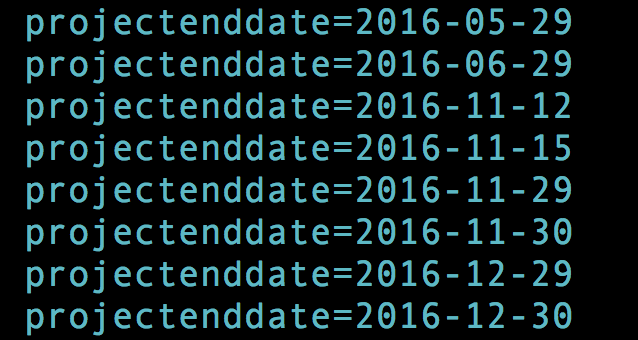

There is a test case in StandardPartitionTableQueryTestCase that uses a date column as the partition column. If you run that test case, the generated partition folders look like the pictures attached to this message.

Are you getting similar folders?
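To compare folder layouts without screenshots, a small script can count the files under each partition folder. This is only a minimal sketch, not part of CarbonData: it assumes the table directory sits on a local filesystem and that partition folders use the `column=value` naming. The function name `summarize_partitions` is hypothetical.

```python
import os
from collections import defaultdict


def summarize_partitions(table_path):
    """Return {partition: (file_count, total_bytes)} for 'column=value' folders.

    Assumption: partition folders are named like 'dt=2018-06-05'.
    Files outside such folders (for example metadata) are ignored.
    """
    stats = defaultdict(lambda: [0, 0])
    for root, _dirs, files in os.walk(table_path):
        rel = os.path.relpath(root, table_path)
        # Keep only the path components that look like partition folders.
        parts = [p for p in rel.split(os.sep) if "=" in p]
        if not parts:
            continue
        key = "/".join(parts)
        for name in files:
            stats[key][0] += 1
            stats[key][1] += os.path.getsize(os.path.join(root, name))
    return {k: tuple(v) for k, v in stats.items()}
```

Calling `summarize_partitions` on the table directory and printing the result sorted by partition name shows quickly whether each date folder holds a few large files or many small ones.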

Regards,

Jacky

On Jun 5, 2018, at 2:49 PM, 陈星宇 <[hidden email]> wrote:

Hi CarbonData team,

I am using CarbonData 1.3.1 to create a table and import data. The load generates many small files and the Spark job is very slow. I suspect the number of files is related to the number of Spark jobs, but if I reduce the number of jobs, the job fails with an out-of-memory error. My DDL is below:

    create table xx.xx(
      dept_name string,
      xx
      .
      .
      .
    ) PARTITIONED BY (xxx date)
    STORED BY 'carbondata' TBLPROPERTIES('SORT_COLUMNS'='xxx,xxx,xxx ,xxx,xxx')

Please give some advice.

Thanks,

ChenXingYu
