[GitHub] carbondata pull request #2503: [CARBONDATA-2734] Update is not working on th...


GitHub user ravipesala opened a pull request:

https://github.com/apache/carbondata/pull/2503

[CARBONDATA-2734] Update is not working on the table which has segmentfile present

It reverts PR https://github.com/apache/carbondata/pull/2385 and fixes IUD (insert/update/delete) on flat-folder tables.

Be sure to go through all of the following checklist items to help us incorporate your contribution quickly and easily:

- [ ] Any interfaces changed?
- [ ] Any backward compatibility impacted?
- [ ] Document update required?
- [ ] Testing done
  Please provide details on:
  - Whether new unit test cases have been added, or why no new tests are required?
  - How is it tested? Please attach the test report.
  - Is it a performance-related change? Please attach the performance test report.
  - Any additional information to help reviewers test this change.
- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/ravipesala/incubator-carbondata flat-folder-update-issue

Alternatively, you can review and apply these changes as the patch at:

https://github.com/apache/carbondata/pull/2503.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #2503

----
commit caf2304736741748e6ba896d5ba29333ee95defc
Author: ravipesala <ravi.pesala@...>
Date: 2018-07-13T07:45:15Z

    Update is not working on the table which has segmentfile present
----

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2503

SDV Build Success, please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5822/

[GitHub] carbondata pull request #2503: [CARBONDATA-2734] Update is not working on th...


Github user jackylk commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2503#discussion_r202508061

--- Diff: integration/spark2/src/main/scala/org/apache/spark/sql/execution/command/mutation/DeleteExecution.scala ---
@@ -134,7 +134,7 @@ object DeleteExecution {
           groupedRows.toIterator,
           timestamp,
           rowCountDetailsVO,
-          carbonTable.isHivePartitionTable)
+          segmentFile)
--- End diff --

Would it be better to pass SegmentStatus instead of a string, for better encapsulation?

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Failed with Spark 2.1.0, please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7170/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Failed with Spark 2.2.1, please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/5946/

[GitHub] carbondata pull request #2503: [CARBONDATA-2734] Update is not working on th...


Github user ravipesala commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2503#discussion_r202945784

--- Diff: integration/spark2/src/main/scala/org/apache/spark/sql/execution/command/mutation/DeleteExecution.scala ---
@@ -134,7 +134,7 @@ object DeleteExecution {
           groupedRows.toIterator,
           timestamp,
           rowCountDetailsVO,
-          carbonTable.isHivePartitionTable)
+          segmentFile)
--- End diff --

OK, but I passed Segment instead of SegmentStatus.
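The encapsulation point in this exchange (pass a segment object rather than a bare string or boolean) can be illustrated with a minimal sketch. `DeleteSketch`, `SegmentSketch`, `describe` and `isLegacy` are hypothetical names invented for illustration, not CarbonData's actual `Segment` API; the assumption that a missing segment file marks a legacy segment is taken from ravipesala's later comment in this thread.

```java
import java.util.Objects;

// Hypothetical caller/callee pair: names are illustrative, not CarbonData's real API.
final class DeleteSketch {
    // The callee receives the whole segment instead of a bare String or boolean,
    // so it can ask the segment what it needs (here: whether it is legacy).
    static String describe(SegmentSketch segment) {
        if (segment.isLegacy()) {
            return "segment " + segment.getSegmentNo() + ": legacy path resolution";
        }
        return "segment " + segment.getSegmentNo() + ": resolve via " + segment.getSegmentFileName();
    }

    public static void main(String[] args) {
        System.out.println(describe(new SegmentSketch("0", null)));
        System.out.println(describe(new SegmentSketch("1", "example.segment")));
    }
}

// Hypothetical stand-in for CarbonData's Segment class.
final class SegmentSketch {
    private final String segmentNo;
    // null when the segment was written without a segment file (assumed legacy segment).
    private final String segmentFileName;

    SegmentSketch(String segmentNo, String segmentFileName) {
        this.segmentNo = Objects.requireNonNull(segmentNo, "segmentNo");
        this.segmentFileName = segmentFileName;
    }

    String getSegmentNo() { return segmentNo; }

    String getSegmentFileName() { return segmentFileName; }

    // Mirrors the "segment file name != null" distinction discussed in this thread.
    boolean isLegacy() { return segmentFileName == null; }
}
```

With this shape, adding another segment property later changes `SegmentSketch` only, not every method signature along the call chain.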

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Failed with Spark 2.2.1, please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/6015/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Failed with Spark 2.1.0, please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7252/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Success with Spark 2.1.0, please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7284/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Success with Spark 2.2.1, please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/6051/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2503

SDV Build Success, please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5913/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user manishgupta88 commented on the issue:

https://github.com/apache/carbondata/pull/2503

@ravipesala Please correct me if my understanding is wrong. The `SegmentFileName != null` check was introduced when the partition feature was developed, to distinguish between partition and non-partition tables. Now a segment file is written even for a normal table, so using this check is not correct. I think it is better to write one generic method that takes the carbonTable instance as an argument and checks for Hive partition table / supportFlatFolder / non-transactional table. Based on that condition we can use an if/else block and call the respective methods.

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2503

@manishgupta88 The `SegmentFileName != null` check was added only to distinguish legacy segments from new ones, not for partition tables. If the segment is new, the segment file will be present, so the carbondata file path can be taken relative to the table path to generate the block ID. I feel it is always better to take the carbondata file path relative to the table path to avoid these kinds of checks; otherwise, whenever we change the path layout, we have to update the code.
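A minimal sketch of the "path relative to the table path" idea, using only `java.nio.file`. `RelativeBlockPathSketch` and `relativeToTable` are hypothetical names, and the table and file paths are made up; CarbonData's real block-ID code is different. The point it shows is that one relativization handles both the standard `Fact/Part0/Segment_N` layout and a flat-folder layout without a layout-specific branch.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

final class RelativeBlockPathSketch {
    // Hypothetical helper: returns the data file path relative to the table path,
    // always joined with '/' so the result does not depend on the platform separator.
    static String relativeToTable(String tablePath, String carbonDataFilePath) {
        Path table = Paths.get(tablePath).normalize();
        Path file = Paths.get(carbonDataFilePath).normalize();
        // Path.startsWith compares whole name elements, so "/t10/x" is not under "/t1".
        if (!file.startsWith(table)) {
            throw new IllegalArgumentException(file + " is not under " + table);
        }
        Path relative = table.relativize(file);
        StringBuilder sb = new StringBuilder();
        for (Path part : relative) {
            if (sb.length() > 0) {
                sb.append('/');
            }
            sb.append(part.toString());
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String table = "/warehouse/db/t1";
        // Standard layout (made-up file name).
        System.out.println(relativeToTable(table, table + "/Fact/Part0/Segment_0/a.carbondata"));
        // Flat-folder layout (made-up file name).
        System.out.println(relativeToTable(table, table + "/a.carbondata"));
    }
}
```

The trade-off raised in the next comment is visible here: for a standard table the relative path carries the `Fact/Part0/Segment_` directories, which lengthens any ID derived from it.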

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user manishgupta88 commented on the issue:

https://github.com/apache/carbondata/pull/2503

@ravipesala I get your point that we should always take the path relative to tablePath. But the problem with using CarbonTablePath.getShortBlockIdForPartitionTable is that, even for a normal carbonTable, the tupleId obtained through a select query is some 15-20 characters longer, and if we want to store this tuple ID, the space used will increase. So we need to find some way to shorten this ID. For a normal carbon table, the TupleId values are:

- using **getShortBlockIdForPartitionTable**: Fact#Part0#Segment_0/0/0-0_batchno0-0-0-1532066017077/0/0/0
- using **getShortBlockId** and correcting **CarbonUtil.getBlockId**: 0/0/0-0_batchno0-0-0-1532069720380/0/0/0
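The two sample tuple IDs above differ only by a leading `Fact#Part0#Segment_` (the table-relative directory, apparently with `/` encoded as `#`) plus the load timestamp. The sketch below measures that overhead by stripping the prefix; `TupleIdPrefixSketch` and `shorten` are hypothetical names, and this string manipulation is only an illustration, not how `getShortBlockId` is actually implemented.

```java
final class TupleIdPrefixSketch {
    // Prefix observed in the thread's sample tuple ID for a normal (standard-layout) table.
    static final String STANDARD_PREFIX = "Fact#Part0#Segment_";

    // Hypothetical helper: drops the standard-layout prefix if present, else returns the input.
    static String shorten(String tupleId) {
        return tupleId.startsWith(STANDARD_PREFIX)
            ? tupleId.substring(STANDARD_PREFIX.length())
            : tupleId;
    }

    public static void main(String[] args) {
        String longId = "Fact#Part0#Segment_0/0/0-0_batchno0-0-0-1532066017077/0/0/0";
        String shortId = shorten(longId);
        System.out.println(shortId);
        System.out.println("saved " + (longId.length() - shortId.length()) + " characters per tuple ID");
    }
}
```

Running it prints the short form `0/0/0-0_batchno0-0-0-1532066017077/0/0/0` and a saving of 19 characters, which falls in the 15-20 character range mentioned above.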

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2503

@manishgupta88 OK, I have made the necessary changes.

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Success with Spark 2.1.0, please check CI http://136.243.101.176:8080/job/ApacheCarbonPRBuilder1/7363/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user CarbonDataQA commented on the issue:

https://github.com/apache/carbondata/pull/2503

Build Success with Spark 2.2.1, please check CI http://88.99.58.216:8080/job/ApacheCarbonPRBuilder/6124/

[GitHub] carbondata issue #2503: [CARBONDATA-2734] Update is not working on the table...


Github user ravipesala commented on the issue:

https://github.com/apache/carbondata/pull/2503

SDV Build Success, please check CI http://144.76.159.231:8080/job/ApacheSDVTests/5939/

[GitHub] carbondata pull request #2503: [CARBONDATA-2734] Update is not working on th...

|

In reply to this post by qiuchenjian-2

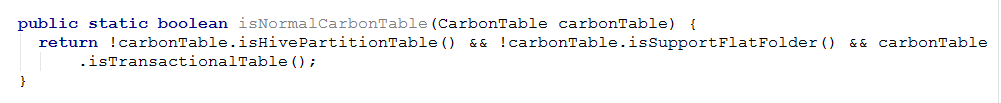

Github user manishgupta88 commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2503#discussion_r204202741

--- Diff: core/src/main/java/org/apache/carbondata/core/util/CarbonUtil.java ---
@@ -3235,4 +3235,17 @@ public boolean accept(CarbonFile file) {
     int version = fileHeader.getVersion();
     return ColumnarFormatVersion.valueOf((short)version);
   }
+
+  /**
+   * Check whether it is standard table means tablepath has Fact/Part0/Segment_ tail present with
+   * all carbon files. In other cases carbon files present directly under tablepath or
+   * tablepath/partition folder
+   * TODO Read segment file and corresponding index file to get the correct carbondata file instead
+   * of using this way.
+   * @param table
+   * @return
+   */
+  public static boolean isStandardCarbonTable(CarbonTable table) {
+    return !(table.isSupportFlatFolder() || table.isHivePartitionTable());
--- End diff --

Please also add a check for the SDK case: CarbonTable.isTransactionalTable().

[GitHub] carbondata pull request #2503: [CARBONDATA-2734] Update is not working on th...


Github user ravipesala commented on a diff in the pull request:

https://github.com/apache/carbondata/pull/2503#discussion_r204213984

--- Diff: core/src/main/java/org/apache/carbondata/core/util/CarbonUtil.java ---
@@ -3235,4 +3235,17 @@ public boolean accept(CarbonFile file) {
     int version = fileHeader.getVersion();
     return ColumnarFormatVersion.valueOf((short)version);
   }
+
+  /**
+   * Check whether it is standard table means tablepath has Fact/Part0/Segment_ tail present with
+   * all carbon files. In other cases carbon files present directly under tablepath or
+   * tablepath/partition folder
+   * TODO Read segment file and corresponding index file to get the correct carbondata file instead
+   * of using this way.
+   * @param table
+   * @return
+   */
+  public static boolean isStandardCarbonTable(CarbonTable table) {
+    return !(table.isSupportFlatFolder() || table.isHivePartitionTable());
--- End diff --

This check is not valid here, because some call sites already check it explicitly.
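The predicate in this diff, and the stricter variant the reviewer asked for, can be compared with a small stand-in for `CarbonTable`. `StandardTableSketch`, `TableFlags`, `isStandardCarbonTableStrict` and `flags` are hypothetical names; only the three flag methods mirror `CarbonTable` methods named in this thread. The strict variant is the reviewer's suggestion, which the author declined to put inside this helper because callers already check transactional tables themselves.

```java
final class StandardTableSketch {
    // Same logic as the diff: standard layout unless flat-folder or Hive-partitioned.
    static boolean isStandardCarbonTable(TableFlags table) {
        return !(table.isSupportFlatFolder() || table.isHivePartitionTable());
    }

    // Reviewer's suggested variant: also exclude non-transactional (SDK-written) tables.
    static boolean isStandardCarbonTableStrict(TableFlags table) {
        return table.isTransactionalTable() && isStandardCarbonTable(table);
    }

    // Hypothetical factory building a table description from three flags.
    static TableFlags flags(boolean flatFolder, boolean hivePartition, boolean transactional) {
        return new TableFlags() {
            public boolean isSupportFlatFolder() { return flatFolder; }
            public boolean isHivePartitionTable() { return hivePartition; }
            public boolean isTransactionalTable() { return transactional; }
        };
    }

    public static void main(String[] args) {
        TableFlags sdkTable = flags(false, false, false);
        System.out.println(isStandardCarbonTable(sdkTable));       // true
        System.out.println(isStandardCarbonTableStrict(sdkTable)); // false
    }
}

// Hypothetical stand-in for the CarbonTable flags discussed in this thread.
interface TableFlags {
    boolean isSupportFlatFolder();
    boolean isHivePartitionTable();
    boolean isTransactionalTable();
}
```

The two variants disagree only for a non-transactional table with neither flat-folder nor Hive partitioning, which is exactly the SDK case the review comment is about.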
