[DISCUSSION] CarbonData Integration with Presto

|

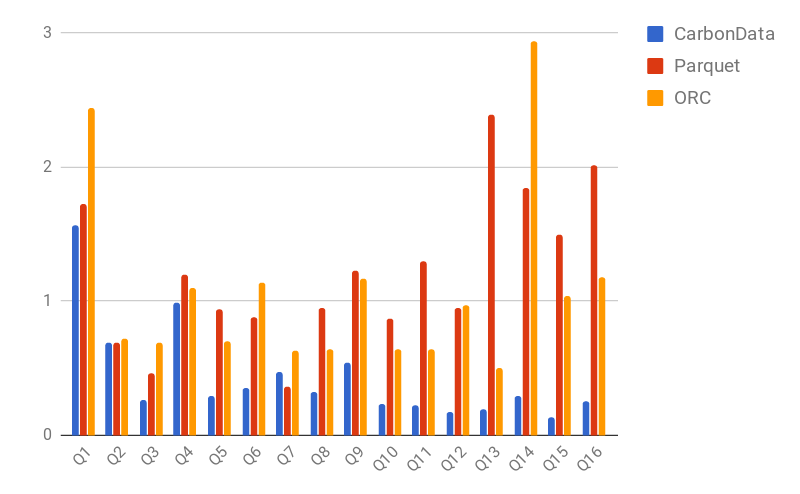

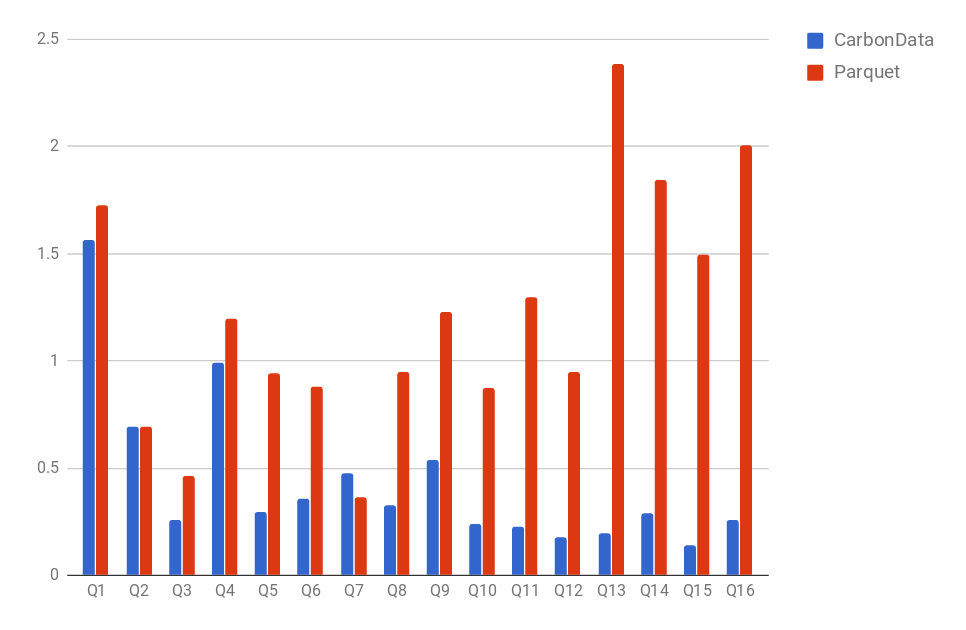

Question: We were able to run only 16 of the 22 queries, because 2 queries involve temporary tables and 4 involve views, neither of which is supported by CarbonData. Is there a way to run all the queries?

Environment

Cluster: 3-node cluster (48 GB RAM, 8 CPU cores, and 2 TB hard disk each)

Data set: 50 GB of data generated using TPCH 2.17.2 (schema is attached)

CarbonData is performing better than Parquet, but since I have to make a decision on it, we are trying to benchmark on more data and also compare it with ORC.

|

Wonderful! The performance of Carbondata is much better than Parquet. Regards. Chenerlu. |

|

In reply to this post by bhavya411

Can you share all of your configuration files (etc/) for the coordinator and the workers? What configuration tuning did you do for CarbonData?

Many workloads at our company run on Presto. I have tested Presto reading ORC vs. CarbonData, and CarbonData is clearly inferior to ORC.

During the testing, for performance reasons, we used replicated joins in particular and increased the JVM code cache size, but these settings apply equally to both ORC and CarbonData.

Carbondata_vs_ORC_on_Presto_Benchmark_testing.docx

|

Hi,

Please find attached the configuration settings that we used. We are running Presto Server 0.179 for our testing.

On Fri, Jun 30, 2017 at 8:23 AM, linqer <[hidden email]> wrote:

> Can you give me your all configuration files (etc/) of coordinate and worker,


|

Hi Bhavya

Currently, 1.2.0 proposes to support Presto version 0.166. Is there any performance difference between 0.179 and 0.166?

Regards, Liang

2017-07-01 13:12 GMT+08:00 Bhavya Aggarwal <[hidden email]>:

> Hi,
>
> Please find the configuration setting that we used attached with this
> email , we are running Presto Server 0.179 for our testing.
>
> Thanks and regards
> Bhavya

|

In reply to this post by bhavya411

Hi

1. You can set -XX:ReservedCodeCacheSize in jvm.config; make it large.
2. I don't see your discovery.uri IP. Make sure the master acts as both the coordinator and the discovery server.
3. Remove query.max-memory-per-node first.
4. You can test ORC; Parquet is not supported very well on Presto.

Thanks

|

In reply to this post by bhavya411

For your first test, what configuration tuning did you do for CarbonData? Could you also provide a detailed test report, including the CREATE TABLE statements, the row counts of the tables, etc.?

|

|

In reply to this post by Liang Chen-2

Hi Liang,

We went through the Presto documentation, and there were some issues with Presto 0.166 that were resolved in later versions. There is a lot of performance improvement between 0.166 and 0.179, as the way joins are interpreted in Presto has changed since 0.166. Please see below the two most important reasons why we chose to run it on 0.179.

1. A fix for an issue that could cause incorrect results when processing dictionary-encoded data: if the expression can fail on bad input, the results from filtered-out rows containing bad input may be included in the query output.
2. The order in which joins are executed in a query can have a significant impact on the query's performance. The aspect of join ordering that has the largest impact on performance is the size of the data being processed and passed over the network. If a join is not a primary key-foreign key join, the data produced can be much greater than the size of either table in the join, up to |Table 1| x |Table 2| for a cross join. If a join that produces a lot of data is performed early in the execution, then subsequent stages need to process large amounts of data for longer than necessary, increasing the time and resources needed for the query and possibly causing the query to fail. This issue has been fixed in Presto from release 0.178 onwards (11.3. Release 0.178).

Thanks and regards, Bhavya

On Sun, Jul 2, 2017 at 10:41 AM, Liang Chen <[hidden email]> wrote:

> Hi Bhavya
>
> Currently, 1.2.0 propose to support presto version with 0.166.
> Is there any performance difference between 0.179 and 0.166?
>
> Regards
> Liang
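The join-size argument in the message above can be illustrated with a toy example. This is only a sketch: the two tables and their column layout are made up for illustration (they are not the TPC-H schema), and the code shows row counts in plain Python rather than anything Presto does internally.

```python
from itertools import product

# Hypothetical toy tables, not the TPC-H schema.
customers = [(1, "a"), (2, "b"), (3, "c")]               # (custkey, name)
orders = [(10, 1), (11, 1), (12, 2), (13, 3), (14, 3)]   # (orderkey, custkey)

# Cross join: every customer paired with every order,
# so the output has |customers| x |orders| rows.
cross_join = list(product(customers, orders))

# Primary key-foreign key join: each order matches exactly one customer,
# so the output is no larger than the orders table.
key_join = [(c, o) for c, o in product(customers, orders) if c[0] == o[1]]

print(len(cross_join), len(key_join))
```

With 3 customers and 5 orders the cross join yields 15 rows while the key join yields 5. At benchmark scale the same ratio is what makes an early, non-selective join expensive for every later stage.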

|

In reply to this post by linqer

Thanks Linqer, I will try the options above. We tried it with ORC as well; here are the comparison results for the same queries with 50 GB of data and the same configuration that I mentioned earlier.

On Mon, Jul 3, 2017 at 9:39 AM, linqer <[hidden email]> wrote:

> Hi

|

Please see the image, as there was a problem with the image in the previous email.

Thanks and regards, Bhavya

On Mon, Jul 3, 2017 at 11:45 AM, Bhavya Aggarwal <[hidden email]> wrote:

|

|

Hi, did you test ORC vs. CarbonData with the configuration I suggested?

|

|

I am currently loading 500 GB of data. Once that is completed, I will try with your settings today.

Regards, Bhavya

On 06-Jul-2017 8:39 am, "linqer" <[hidden email]> wrote:

> hi, did you test orc vs carbondata with configuration as I suggested?

|

In reply to this post by bhavya411

Hi Linqer,

I am trying to run with the configuration that you suggested on 500 GB of data. Can you please verify the properties below?

config.properties

    coordinator=true
    node-scheduler.include-coordinator=true
    http-server.http.port=8086
    query.max-memory=5GB
    #query.max-memory-per-node=1GB
    discovery-server.enabled=true
    discovery.uri=http://xx.xx.xx.xx:8086

jvm.config

    -server
    -Xmx16G
    -XX:+UseG1GC
    -XX:G1HeapRegionSize=32M
    -XX:+UseGCOverheadLimit
    -XX:+ExplicitGCInvokesConcurrent
    -XX:+HeapDumpOnOutOfMemoryError
    -XX:OnOutOfMemoryError=kill -9 %p
    -XX:ReservedCodeCacheSize=1G

Regards, Bhavya
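A quick consistency check of the coordinator properties posted above could look like the following. This is a minimal sketch, not a Presto tool: the helper names `parse_properties` and `check_coordinator` are hypothetical, and the only rule it encodes is the one raised earlier in the thread, that the node acting as coordinator also serves discovery, so `discovery.uri` should point at the coordinator's own HTTP port.

```python
from urllib.parse import urlparse

# The coordinator properties as posted in the thread (host is redacted there).
CONFIG_TEXT = """\
coordinator=true
node-scheduler.include-coordinator=true
http-server.http.port=8086
query.max-memory=5GB
#query.max-memory-per-node=1GB
discovery-server.enabled=true
discovery.uri=http://xx.xx.xx.xx:8086
"""


def parse_properties(text):
    """Parse key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_coordinator(props):
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    if props.get("coordinator") != "true":
        problems.append("coordinator is not enabled")
    if props.get("discovery-server.enabled") != "true":
        problems.append("embedded discovery server is not enabled")
    uri = props.get("discovery.uri")
    if uri is None:
        problems.append("discovery.uri is missing")
    elif str(urlparse(uri).port) != props.get("http-server.http.port"):
        problems.append("discovery.uri port differs from http-server.http.port")
    return problems


props = parse_properties(CONFIG_TEXT)
print(check_coordinator(props))
```

The commented-out `query.max-memory-per-node` line is skipped by the parser, which matches the suggestion to remove that setting for the first run.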

|

|

There is no obvious issue in your configuration. Have you finished testing on ORC?

|

|

You can add

    -XX:+PrintGCDateStamps
    -XX:+PrintGCApplicationStoppedTime
    -XX:+PrintGCDetails
    -Xloggc:/var/presto/data/var/log/presto-gc.log

to your jvm.config. If you think there is a GC issue, you can check the GC logs.
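Once those flags are in place, the pause lines written by -XX:+PrintGCApplicationStoppedTime can be summarized with a short script. This is a sketch under assumptions: the sample lines below are hypothetical and follow the usual JDK 8 wording ("Total time for which application threads were stopped: ... seconds"); other JVM versions may format the log differently, so the pattern may need adjusting.

```python
import re

# Pattern for the stopped-time lines; the wording is assumed from JDK 8 output.
STOPPED = re.compile(
    r"Total time for which application threads were stopped: ([0-9.]+) seconds"
)

# Hypothetical sample lines, not taken from a real Presto run.
SAMPLE_LOG = [
    "2017-07-06T10:15:30.123+0530: 1.234: Total time for which application "
    "threads were stopped: 0.0012000 seconds, Stopping threads took: 0.0000100 seconds",
    "2017-07-06T10:15:31.456+0530: 2.567: [GC pause (G1 Evacuation Pause) (young), 0.2400000 secs]",
    "2017-07-06T10:15:31.700+0530: 2.811: Total time for which application "
    "threads were stopped: 0.2500000 seconds, Stopping threads took: 0.0000200 seconds",
]


def pause_seconds(lines):
    """Collect the stopped-time value (in seconds) from each matching line."""
    pauses = []
    for line in lines:
        match = STOPPED.search(line)
        if match:
            pauses.append(float(match.group(1)))
    return pauses


def summarize(pauses):
    """Return the count, total, and longest pause in seconds."""
    if not pauses:
        return {"count": 0, "total": 0.0, "max": 0.0}
    return {"count": len(pauses), "total": sum(pauses), "max": max(pauses)}


print(summarize(pause_seconds(SAMPLE_LOG)))
```

To run it against a real log, pass an open file instead of `SAMPLE_LOG`, for example the file named in the -Xloggc option. A large total or a long maximum pause during a query would support the suspicion of a GC issue.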
